PRECIOUS HPC

The infrastructure provides computing power through high-performance processors (CPUs) and graphics processors (GPUs), together with a large amount of RAM and massive hard-disk storage capacity (on the order of petabytes). All components, and the infrastructure as a whole, are highly scalable and upgradable: the existing 12 nodes can be upgraded to quadruple (4x) the total processing power without interrupting the operation of the infrastructure. The infrastructure includes the tools needed for continuous monitoring from anywhere, and a logging system records both software and hardware events.

The 12 nodes of the infrastructure provide 1.26 THz (terahertz) of aggregate computing power, 4.48 TB (terabytes) of RAM and 211.86 TB of hard-disk storage. Four of the nodes contain 2 graphics cards each, and each card has 10752 CUDA (Compute Unified Device Architecture) cores for high-demand scientific computing applications. The GPU capabilities support demanding computations such as photorealistic graphics rendering and scientific Artificial Intelligence (AI) applications, where machine learning workloads based on deep-learning neural networks with tens of millions of parameters are accelerated.

The infrastructure has been designed and configured so that, even if a node or hard drives fail, applications and data remain immediately functional through dedicated protocols and the corresponding software.

The sustainability plan for the Precious high-performance cloud computing infrastructure considered state-of-the-art technologies aimed at reducing energy consumption, optimizing resource management, promoting the use of renewable energy sources and maintaining the sustainability of the overall Precious infrastructure. All these actions contribute to more sustainable and environmentally friendly provision of cloud computing services for precision medicine applications.
A comprehensive sustainability plan considers efficiency, renewable energy, recycling, and best practices for supercomputing infrastructure resource management.
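
As a rough illustration of the hardware figures quoted above, the short sketch below derives a few aggregate values from them. It assumes that the 10752 CUDA cores are per graphics card and that memory and storage can be averaged evenly across nodes; the nodes may in fact be heterogeneous, so the per-node values are averages only. All variable names are illustrative.

```python
# Figures quoted in the text above.
TOTAL_NODES = 12
TOTAL_RAM_TB = 4.48
TOTAL_DISK_TB = 211.86
GPU_NODES = 4
GPUS_PER_GPU_NODE = 2
CUDA_CORES_PER_GPU = 10752  # assumption: the quoted core count is per card

# Derived aggregates.
total_gpus = GPU_NODES * GPUS_PER_GPU_NODE
total_cuda_cores = total_gpus * CUDA_CORES_PER_GPU

# Averages only: the text does not say how RAM and disk are distributed.
avg_ram_per_node_tb = TOTAL_RAM_TB / TOTAL_NODES
avg_disk_per_node_tb = TOTAL_DISK_TB / TOTAL_NODES

print(f"GPUs in total:        {total_gpus}")
print(f"CUDA cores in total:  {total_cuda_cores}")
print(f"Average RAM per node: {avg_ram_per_node_tb:.2f} TB")
print(f"Average disk per node: {avg_disk_per_node_tb:.2f} TB")
```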

HPC processing

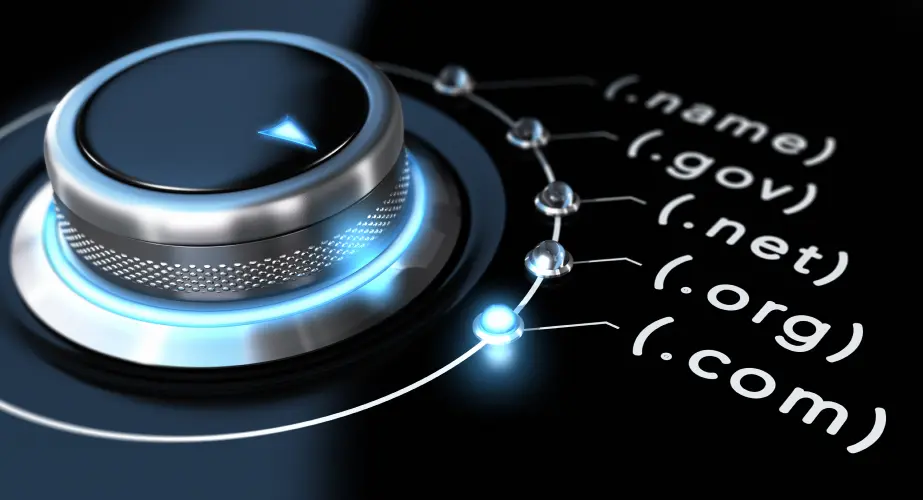

High Performance Computing (HPC)

High Performance Computing (HPC) refers to an advanced and powerful class of computing systems designed to perform complex and demanding computing tasks at very high speeds. The goal of HPC is to quickly solve problems that require massive computing power, such as simulations, the analysis of large systems, big data processing, searches over large data spaces, and other computationally intensive tasks.

Parallel processing

HPC systems typically consist of multiple processors (CPUs) and/or graphics processing units (GPUs) working together to perform computational tasks in parallel. Parallel processing allows computing workloads to be divided into smaller chunks that can be processed simultaneously, improving overall performance.
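
The divide, process and combine pattern described above can be sketched in a few lines of Python. This is a minimal illustration, not the way workloads are actually scheduled on the Precious infrastructure: the function names are hypothetical, and a thread pool is used only to keep the example portable. For CPU-bound work in standard Python, a process pool (`concurrent.futures.ProcessPoolExecutor`) or a cluster-level framework such as MPI would typically be used instead.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(items, n_chunks):
    """Split a list into n_chunks contiguous pieces of near-equal size."""
    if n_chunks < 1:
        raise ValueError("n_chunks must be at least 1")
    base, extra = divmod(len(items), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        # The first `extra` chunks each take one additional element.
        size = base + (1 if i < extra else 0)
        chunks.append(items[start:start + size])
        start += size
    return chunks


def partial_sum_of_squares(chunk):
    """Work performed independently on a single chunk."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(items, n_workers=4):
    """Divide the workload, process the chunks concurrently, combine results."""
    chunks = split_into_chunks(items, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(partial_sum_of_squares, chunks))
    return sum(partials)


if __name__ == "__main__":
    data = list(range(1_000_000))
    print(parallel_sum_of_squares(data, n_workers=4))
```

The key property is that each chunk can be processed without knowledge of the others, so the only coordination needed is the final combination step.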

The fields that benefit from High Performance Computing are many and varied, including climate modeling, pharmaceutical research, aerodynamics, astrophysics, hydrodynamics, geology, biology, and others that require the analysis of large and complex systems.

Solve problems

In general, High Performance Computing helps to solve problems that conventional computing approaches cannot, enabling science, technology and research to develop in new directions.

Scientific research benefits significantly from High Performance Computing (HPC) in many ways. The capabilities offered by HPC allow researchers to tackle more complex, demanding and extensive scientific problems.

Scientific research benefits

1. Simulations and Modeling: HPC enables the creation of large-scale simulations and modeling of complex physical, chemical, biological and mechanical systems. These simulations provide insights into real-world phenomena that would be prohibitively costly or impossible to observe experimentally.

2. Faster Data Analysis: Scientists dealing with massive data sets, such as those generated by particle accelerators, telescopes, or genome projects, can use HPC to process, analyze, and derive meaning from that data more efficiently.

3. Drug Discovery and Molecular Modeling: HPC is vital in drug discovery and development. Researchers can simulate the behavior of molecules, proteins and drug interactions at the molecular level, helping to design new drugs and understand how existing ones work.

4. Climate and Weather Modeling: Climatologists use HPC to run complex climate models that simulate global weather patterns, ocean currents, and climate change scenarios. These models help understand Earth’s climate dynamics and predict future trends.

5. Astronomy and Astrophysics: HPC enables the simulation of cosmic phenomena, including the formation and evolution of galaxies.